Large language model AIs like ChatGPT and Copilot: a force for good or rat poison for the free internet?

I am amazed at how quickly big tech has crappified my day-to-day digital life with large language model Artificial Intelligence (AI LLMs).

First came the loss of quick, accurate search for a specific email. Then came clicking through a couple of pages of AI-generated crap just to get to my email or file folders. Finally came the subscription price hike for these day-to-day work tools, imposed without notice and without asking, just because they had bolted LLMs into everything.

Solution? I ended my subscription with that tech giant and now exclusively use open source tools where I can assert some control over when and if I use LLMs for tasks where they prove useful.

Don’t get me wrong. AI LLMs are useful. My clinical colleagues love them for summarising encounters with patients, saving hours per day. Executive and Board/Committee assistants are pumping out agendas, action lists and meeting minutes from meeting transcripts in a fraction of the time. Real-time, spoken LLM translation is like the invention of the laser: a solution likely to solve many problems.

However, I am concerned about the most common use of LLMs I observe within work and social spheres: searching for information on topics unfamiliar to the searcher. These sloppy searches, and the re-publication of their results, are leading to the uncritical, amplified circulation of poor-quality information, that is, AI slop, across our feeds and screens.

A quiz night illustration

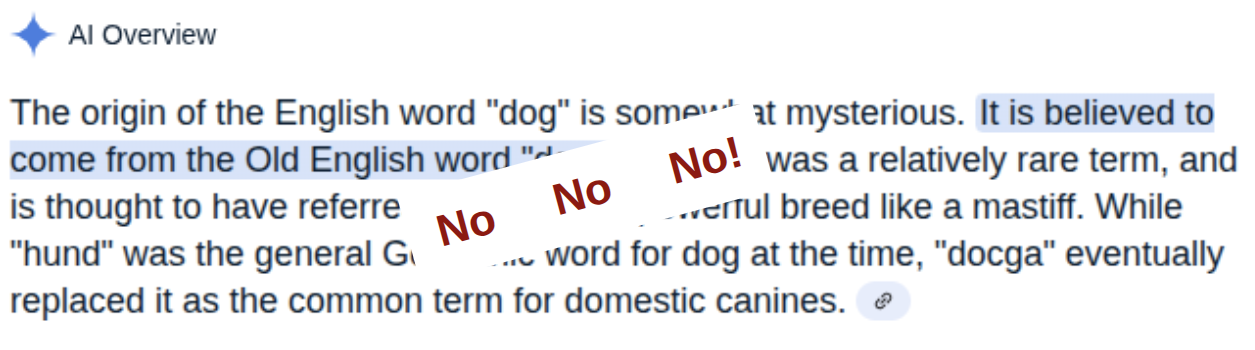

The etymology of the English word ‘dog’ is unknown. As the Oxford English Dictionary (OED) explains, no similar words have been identified with a meaning at all close to that of the English word, and all attempted etymological explanations are extremely speculative.

However, to click through to an authority like the OED, I - like you with every search you are doing now - have to scroll past the AI slop ‘summary’ vomited up by the search engine’s AI LLM at the top of search results.

Disturbingly, it doesn’t matter which search engine is used. Google, Bing, DuckDuckGo and Brave all spew out similar, fallacious AI summaries.

This isn’t all that surprising. ALL the big tech AI LLMs, such as GPT-4, Claude, Llama and Gemini, are trained on much the same training data (i.e. the totality of the public internet) and work in much the same way.*

Perhaps no civilisation-ending decision will be made based on slop served up by LLMs concerning the etymology of ‘dog’. But what about our time-poor politicians and their even more hurried communications teams, their policy analysts and researchers? Such folk are using these tools to ‘assist’ what they are calling ‘research’ many times a day.

As uninformed (re)searchers they can’t be expected to easily distinguish fact from AI slop.

Unfortunately, the assertive prose styling of AI summaries gives little reason to doubt the summary or to explore behind it. AI LLMs never say ‘I don’t know’ to an online search question. Instead, the prose is styled to foster confidence in the AI’s capabilities, even though there are innumerable instances of LLMs simply making things up instead of saying what they should say, that is, ‘I dunno’.

The information cornucopia is over

News and information services depend on us humans clicking through to their web pages from online search results. Such information services, be they as small as Crikey or as large as News Corp, need our eyeballs exposed to paid adverts or to paywall prompts to pay subscriptions. These are major sources of website funding.

Click-through rates to primary sources from search result lists have plummeted, sometimes by more than two thirds, since the introduction of AI summaries at the top of search result lists. This means falling revenue for website publishers. Ultimately, these revenue reductions will hit staff budgets, meaning deep cuts to high-quality information publishing.

Two additional AI driven trends are hastening the decline of publicly available information quality online:

Firstly, paywalls are going up and becoming more expensive as news and information providers try to cope with lower click-throughs. Paywalls also stop AI LLMs gorging on website content for free. Instead, publishers are demanding compensation from the big tech companies seeking access to their high-quality content as AI model training data.

Secondly, into the void created by this loss of ‘free’, reasonable-quality information, a flood of misinformation swill is being generated by actors using AI LLMs to create convincing fake pictures, videos, audio and text reports.

A point of distinction here - AI slop is just LLMs doing what they are trained to do. Misinformation swill is when these AI tools are used to deliberately misinform.

The slop to swill feedback loop

Finally, as more and more online content is AI-generated misinformation swill rather than human-sourced quality information, the AI LLMs are beginning to cannibalise themselves: they are increasingly trained on, and summarise, their own output.

The replacement of human interaction on the internet by automated AI agents peddling misinformation swill, as predicted by dead internet theory, is happening in front of our eyes - albeit without the need for an actual human conspiracy to drive it.

Misinformation is already routinely promoted above real information by the big tech algorithms in social media feeds and internet search results. The LLM-slop search engine summaries are consequently biased towards misinformation.

We are in the midst of an internet information death spiral. It isn’t just X.com that is being destroyed as a reasonable information source.

A real - as well as virtual - world ecological disaster

From a green perspective, it would be remiss not to remind readers that this virtual ecosystem destruction is mirrored by an AI data centre building frenzy with real world ecocidal impacts. The diversion of energy, fresh water and other resources to data centres rather than real world uses, such as growing food or providing safe drinking water, is manifestly insane.

US data centre electricity consumption is projected to grow to about 10% of total US electricity production by the end of the decade. These energy-hungry centres already consume over 30% of Irish electricity production.

Do we continue allowing AI slop to be generated when we search online, when generating a sloppy summary consumes 3-4 times as much energy as a non-AI search does? Or do we want to curb greenhouse emissions and conserve energy, freshwater and other natural resources for more meaningful uses?

In conclusion

Humankind has always been fascinated by, and fearful of, AI’s potential impact upon us. The emergence of LLMs over the last couple of years demonstrates that we don’t need the superpowers of artificial general intelligence to experience problems as we implement AI.

The LLMs are just doing what they are trained to do. It is we who have allowed the unconstrained publication of their sloppy internet search summaries and of the misinformation swill they are summarising.

So it is up to us to set matters right. We may not be able to go back to the days of AltaVista and Netscape. But we can implement policies and regulation to curb the tsunami of slop and swill destroying what was our information cornucopia - the public internet. We have that choice.

Perhaps also, we could spend more time in the real world; interacting with real world sources of information. These include ‘other people’ and ‘books’. I understand both can be found in such places as libraries and bookshops, as the video linked below illustrates.

The Bookshop Sketch (source)

_____________________________________

*Somewhat oversimplified... AI LLMs generally form text by cobbling strings of word concepts together one after the other, in the order they typically follow each other, based on what the internet tells them. They are not doing any ‘thinking’ as such.
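
For readers who like to see the idea in miniature, here is a toy sketch of that ‘one word after the other’ process. It is emphatically not how ChatGPT or any commercial LLM is built: real models use neural networks trained on vast amounts of text and choose among likely continuations with some randomness. The function names (`train_bigrams`, `generate`) and the tiny training text are made up purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, start, length=5):
    """Extend `start` by repeatedly appending the most common next word."""
    out = [start]
    for _ in range(length):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # this toy stops when it has seen nothing; it cannot 'know'
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical training text, standing in for 'what the internet tells them'.
corpus = "the dog chased the cat . the dog chased the ball . the dog sat ."
model = train_bigrams(corpus)
print(generate(model, "dog", length=3))
```

The toy model has no notion of whether its output is true. It only echoes whichever word most often came next in its training text, which is why the quality of that text matters so much.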
